SELECTED PUBLICATIONS

The Space Bender: Supporting Natural Walking via Overt Manipulation of the Virtual Environment

Adalberto Simeone, Niels Christian Nilsson, André Zenner, Marco Speicher, and Florian Daiber

In IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE Conference on Virtual Reality and 3D User Interfaces (VR-2020), IEEE, 2020.

VR, Locomotion, Natural Walking Techniques

The Space Bender is a natural walking technique for room-scale VR. It builds on the idea of overtly manipulating the Virtual Environment by “bending” the geometry whenever the user comes in proximity of a physical boundary. We compared the Space Bender to two other similarly situated techniques: Stop and Reset and Teleportation, in a task requiring participants to traverse a 100 m path. Results show that the Space Bender was significantly faster than Stop and Reset, and preferred to the Teleportation technique, highlighting the potential of overt manipulation to facilitate natural walking.

LIVE: the Human Role while Learning in an Immersive Virtual Environment

Adalberto L. Simeone, Marco Speicher, Andreea Molnar, Adriana Wilde, and Florian Daiber

In Proceedings of the Symposium on Spatial User Interaction (SUI '19). ACM, 2019.


This work studies the role of a human instructor within an immersive VR lesson. Our system allows the instructor to perform "contact teaching" by demonstrating concepts through interaction with the environment, and the student to experiment with interaction prompts. We conducted a between-subjects user study with two groups of students: one experienced the VR lesson while immersed together with an instructor; the other experienced the same contents demonstrated through animation sequences simulating the actions that the instructor would take. Results show that the Two-User version received significantly higher scores than the Single-User version in terms of overall preference, clarity, and helpfulness of the explanations. When immersed together with an instructor, users were more inclined to engage and progress further with the interaction prompts than when the instructor was absent. Based on the analysis of videos and interviews, we identified design recommendations for future immersive VR educational experiences.

Slackliner - An Interactive Slackline Training Assistant

Felix Kosmalla, Christian Murlowski, Florian Daiber, and Antonio Krüger

In Proceedings of the 26th ACM international conference on Multimedia (MM '18). ACM, 2018.

Slackline, sports technologies, projection, real-time feedback

In this paper we present Slackliner, an interactive slackline training assistant which features a life-size projection, skeleton tracking and real-time feedback. As in other sports, proper training leads to a faster buildup of skill and lessens the risk of injury. We chose a set of exercises from slackline literature and implemented an interactive trainer which guides the user through the exercises and gives feedback on whether the exercise was executed correctly. A post analysis gives the user feedback about her performance. We conducted a user study to compare the interactive slackline training system with a classic approach using a personal trainer. No significant difference was found between groups regarding balancing time, number of steps and the walking distance on the line for the left and right foot. Significant main effects for the balancing time on the line, without considering the group, were found. User feedback acquired by questionnaires and semi-structured interviews was very positive. Overall, the results indicate that the interactive slackline training system can be used as an enjoyable and effective alternative to classic training methods.

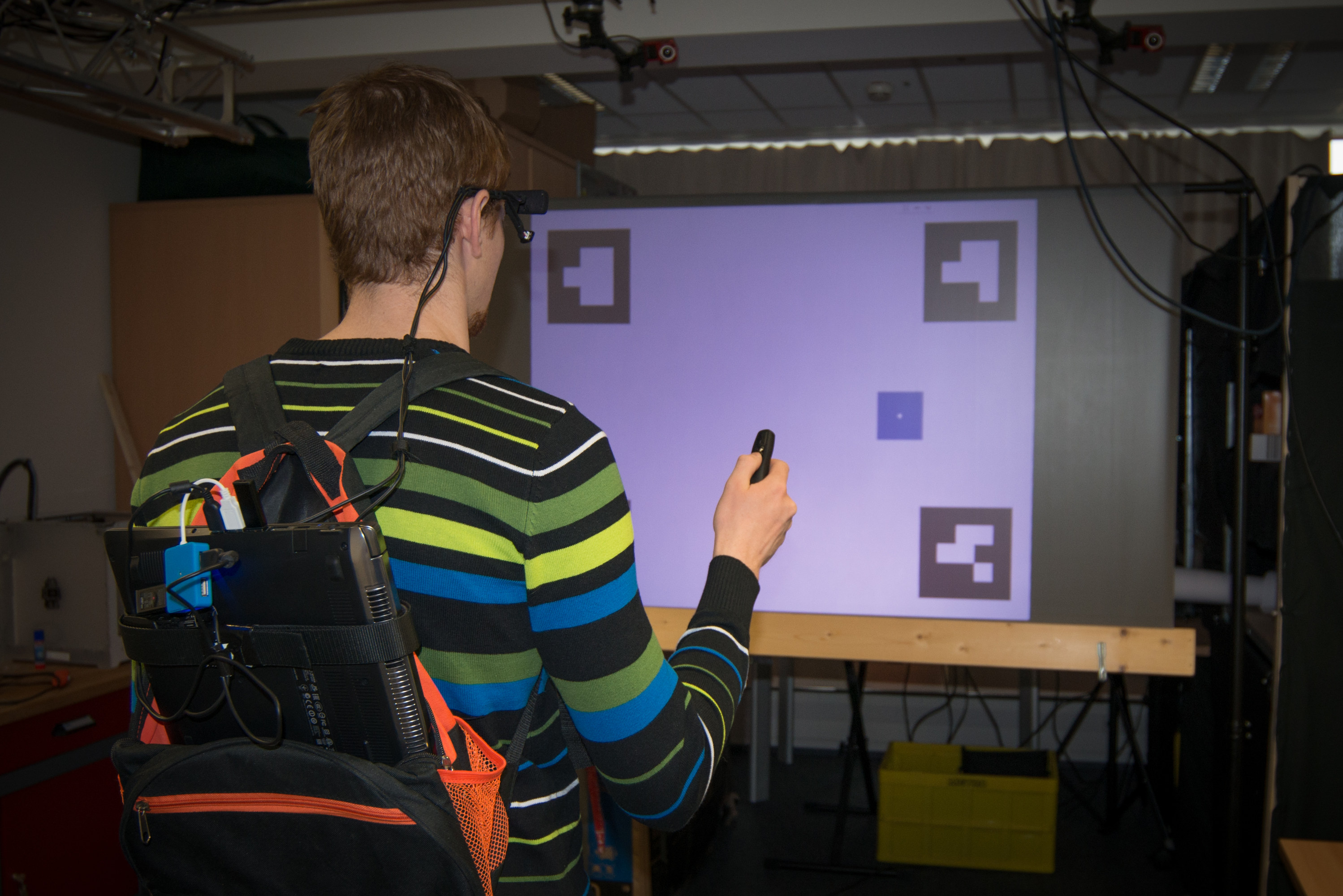

Error-aware gaze-based interfaces for robust mobile gaze interaction

Best Paper Award

Michael Barz, Florian Daiber, Daniel Sonntag, and Andreas Bulling

In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications (ETRA '18). ACM, 2018.

Eye Tracking, Mobile Interaction, Gaze Interaction, Error Model, Error-Aware

Gaze estimation error can severely hamper usability and performance of mobile gaze-based interfaces given that the error varies constantly for different interaction positions. In this work, we explore error-aware gaze-based interfaces that estimate and adapt to gaze estimation error on-the-fly. We implement a sample error-aware user interface for gaze-based selection and different error compensation methods: a naïve approach that increases component size directly proportional to the absolute error, a recent model by Feit et al. that is based on the two-dimensional error distribution, and a novel predictive model that shifts gaze by a directional error estimate. We evaluate these models in a 12-participant user study and show that our predictive model significantly outperforms the others in terms of selection rate, particularly for small gaze targets. These results underline both the feasibility and potential of next generation error-aware gaze-based user interfaces.

FootStriker: An EMS-based Foot Strike Assistant for Running

Mahmoud Hassan, Florian Daiber, Frederik Wiehr, Felix Kosmalla, and Antonio Krüger

Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 1, 1, Article 2 (March 2017), 18 pages.

Electrical Muscle Stimulation, Wearable Devices, Wearables, Real-time Feedback, Motor Skills, Motor Learning, Sports Training, Running, In-situ Feedback, Online Feedback, Real-time Assistance

In running, knee-related injuries are very common. The main cause is high impact forces when striking the ground with the heel first. Mid- or forefoot running is generally known to reduce impact loads and to be a more efficient running style. In this paper, we introduce a wearable running assistant, consisting of an electrical muscle stimulation (EMS) device and an insole with force sensing resistors. It detects heel striking and actuates the calf muscles during the flight phase to control the foot angle before landing. We conducted a user study, in which we compared the classical coaching approach using slow motion video analysis as a terminal feedback to our proposed real-time EMS feedback. The results show that EMS actuation significantly outperforms traditional coaching, i.e., a decreased average heel striking rate, when using the system. As an implication, EMS feedback can generally be beneficial for the motor learning of complex, repetitive movements.

Virtual Reality Climbing: Exploring Rock Climbing in Mixed Reality Environments

Felix Kosmalla, André Zenner, Marco Speicher, Florian Daiber, Nico Herbig, and Antonio Krüger

In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI '17)

Passive Haptic Feedback, Rock Climbing, Mixed Reality, Virtual Reality

While current consumer virtual reality headsets can convey a strong feeling of immersion, one drawback is still the missing haptic feedback when interacting with virtual objects. In this work, we investigate the use of an artificial climbing wall as a haptic feedback device in a virtual rock climbing environment. It enables users to wear a head-mounted display and actually climb on the physical climbing wall, which conveys the feeling of climbing on a large mountain face.

ClimbSense - Automatic Climbing Route Recognition using Wrist-worn Inertia Measurement Units

Felix Kosmalla, Florian Daiber, Antonio Krüger

In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM International Conference on Human Factors in Computing Systems, ACM, 2015.

Climbing, Sports Technologies, Inertial Sensors, Machine Learning

Today, sports and activity trackers are ubiquitous. Runners and cyclists in particular have a variety of possibilities to record and analyze their workouts. In contrast, climbing has not received much attention in consumer electronics and human-computer interaction. If quantified data similar to cycling or running data were available for climbing, several applications would be possible, ranging from simple training diaries to virtual coaches or usage analytics for gym operators. This paper introduces a system that automatically recognizes climbed routes using wrist-worn inertia measurement units (IMUs). This is achieved by extracting features of a recorded ascent and using them as training data for the recognition system. To verify the recognition system, cross-validation methods were applied to a set of ascent recordings that were collected during a user study with eight climbers in a local climbing gym. The evaluation resulted in a high recognition rate, thus showing that our approach is feasible and operational.

Hoverspace

Paul Lubos, Oscar Ariza, Gerd Bruder, Florian Daiber, Frank Steinicke, Antonio Krüger

In: Julio Abascal; Simone Barbosa; Mirko Fetter; Tom Gross; Philippe Palanque; Marco Winckler (Eds.). Human-Computer Interaction – INTERACT 2015. Pages 259-277, Lecture Notes in Computer Science (LNCS), Vol. 9298, ISBN 978-3-319-22697-2, Springer International Publishing, 2015.

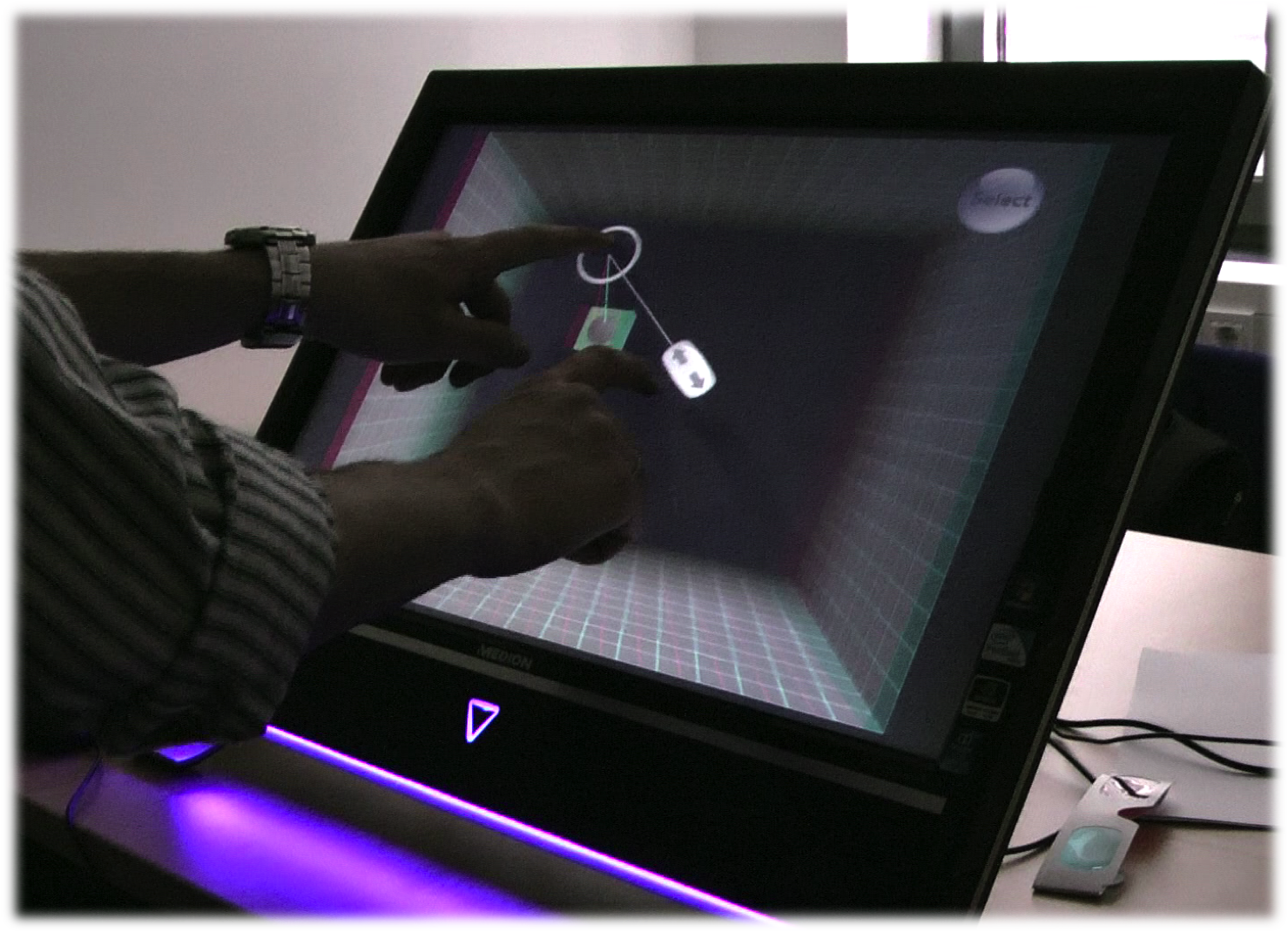

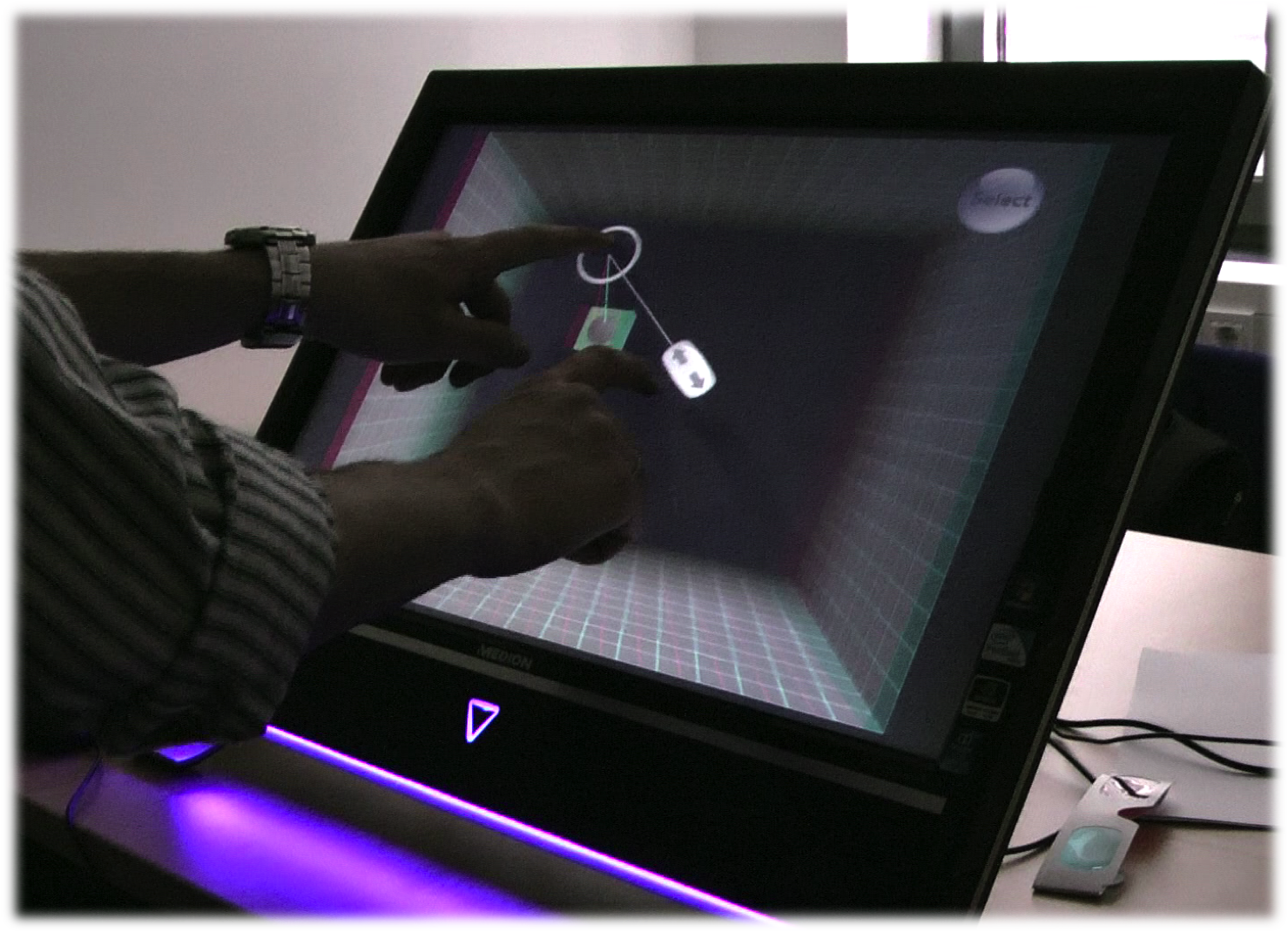

Hover Space, Touch Interaction, Stereoscopic Displays, 3D Interaction

Recent developments in the area of stereoscopic displays and tracking technologies have paved the way to combine touch interaction on interactive surfaces with spatial interaction above the surface of a stereoscopic display. This holistic design space supports novel affordances and user experiences during touch interaction, but also induces challenges for the interaction design. In this paper we introduce the concept of hover interaction for such setups. To this end, we analyze the non-visual volume above a virtual object, which is perceived as the corresponding hover space for that object. The results show that the users’ perceptions of hover spaces can be categorized into two groups. Users assume that the shape of the hover space is extruded and scaled either towards their head or along the normal vector of the interactive surface. We provide a corresponding model to determine the shapes of these hover spaces, and confirm the findings in a practical application. Finally, we discuss important implications for the development of future touch-sensitive interfaces.

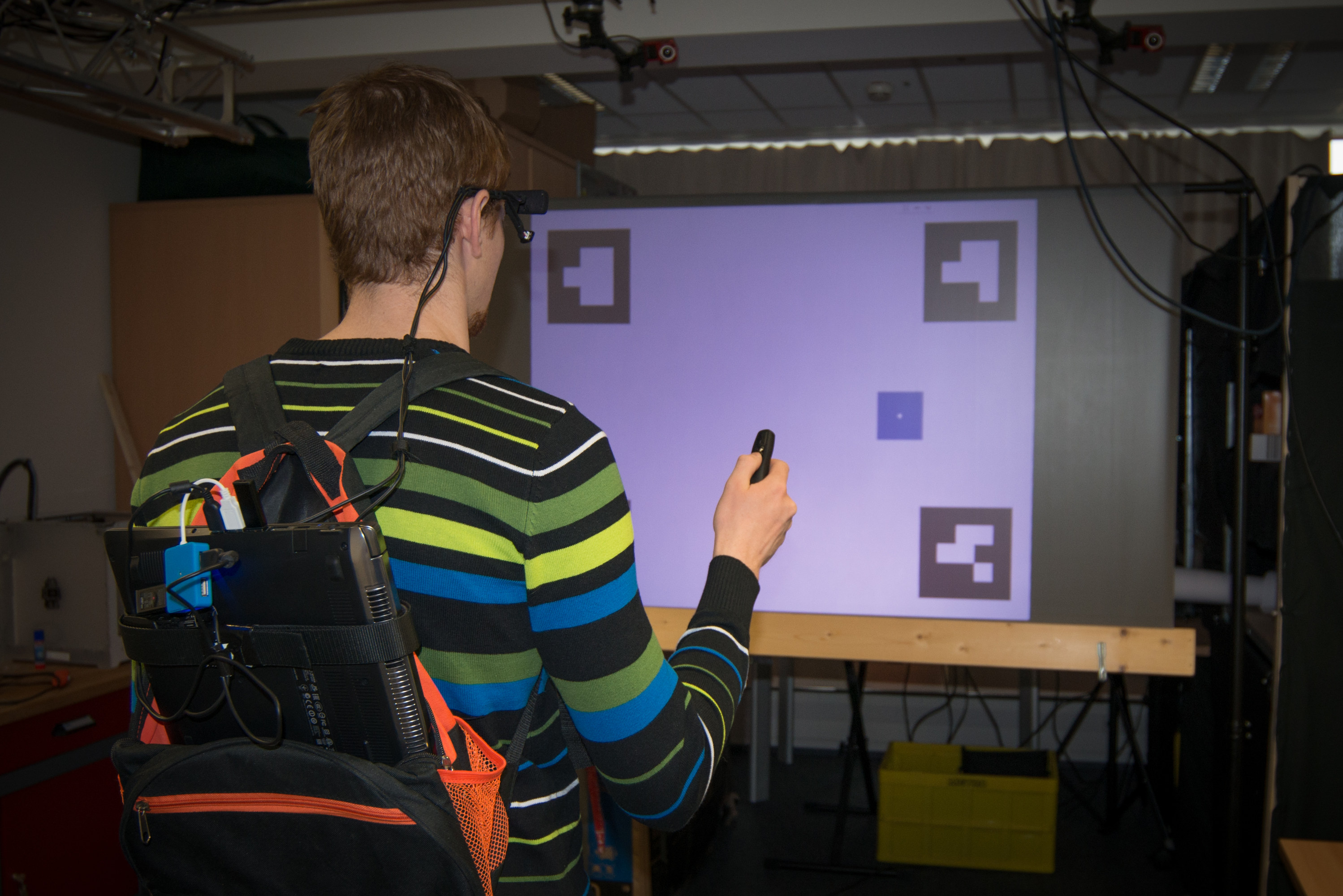

Interacting with 3D Content on Stereoscopic Displays.

Florian Daiber, Marco Speicher, Sven Gehring, Markus Löchtefeld, Antonio Krüger

In: Proceedings of the International Symposium on Pervasive Displays. International Symposium on Pervasive Displays. Pages 32:32--32:37, ACM, 2014.

Spatial Interaction, Gestural Interaction, Mobile Interaction, 3D Travel, Large Displays, Media Facades

As the number of pervasive displays in urban environments grows, recent advances in technology make it possible to display three-dimensional (3D) content on these displays. However, current input techniques for pervasive displays usually focus on interaction with 2D data. To enable interaction with 3D content on pervasive displays, we need to adapt existing interaction techniques and create novel ones. In this paper we investigate remote interaction with 3D content on pervasive displays. We introduce and evaluate four 3D travel techniques that rely on well-established interaction metaphors and use either a mobile device or depth tracking as spatial input. Our study on a large-scale stereoscopic display shows that the physical travel techniques outperformed the virtual techniques with respect to task performance time and error rate.

Is Autostereoscopy Useful for Handheld AR?

Best Paper Nominee

Frederic Kerber; Pascal Lessel; Michael Mauderer; Florian Daiber; Antti Oulasvirta; Antonio Krüger

In: Proceedings of the 12th International Conference on Mobile and Ubiquitous Multimedia. ACM, 2013.

Autostereoscopy, mobile devices, depth discrimination, empirical and quantitative user study, augmented reality

Some recent mobile devices have autostereoscopic displays that enable users to perceive stereoscopic 3D without lenses or filters. This might be used to improve depth discrimination of objects overlaid on a camera viewfinder in augmented reality (AR). However, it is not known if autostereoscopy is useful in the viewing conditions typical of mobile AR. This paper investigates the use of autostereoscopic displays in a psychophysical experiment with twelve participants using a state-of-the-art commercial device. The main finding is that stereoscopy has a negligible, if any, effect on a small screen, even in favorable viewing conditions. Instead, the traditional depth cues, in particular object size, drive depth discrimination.

Interactive Surfaces for Interaction with Stereoscopic 3D (ISIS3D): Tutorial and Workshop at ITS 2013

Florian Daiber; Bruno Rodrigues De Araujo; Frank Steinicke; Wolfgang Stuerzlinger

In: Proceedings of the 2013 ACM International Conference on Interactive Tabletops and Surfaces. Pages 483-486, ACM, 2013.

Stereoscopic Displays, 3D User Interfaces and Interaction, Touch- and Gesture-based Interfaces, Adaptive and Perception-inspired Interfaces, Psychophysiological Studies related to Stereoscopy

With the increasing distribution of multi-touch capable devices multi-touch interaction becomes more and more ubiquitous. Multi-touch interaction offers new ways to deal with 3D data allowing a high degree of freedom (DOF) without instrumenting the user. Due to the advances in 3D technologies, designing for 3D interaction is now more relevant than ever. With more powerful engines and high resolution screens also mobile devices can run advanced 3D graphics, 3D UIs are emerging beyond the game industry, and recently, first prototypes as well as commercial systems bringing (auto-)stereoscopic display on touch-sensitive surfaces have been proposed. With the Tutorial and Workshop on “Interactive Surfaces for Interaction with Stereoscopic 3D (ISIS3D)” we aim to provide an interactive forum that focuses on the challenges that appear when the flat digital world of surface computing meets the curved, physical, 3D space we live in.

Designing Gestures for Mobile 3D Gaming

Florian Daiber; Lianchao Li; Antonio Krüger

In: Proceedings of the 11th International Conference on Mobile and Ubiquitous Multimedia. ACM, 2012.

3D User Interfaces, Gestural Interaction, Mobile Interaction, Mobile Gaming, Stereoscopic Display

In recent years, 3D has become more and more popular. Besides the increasing number of movies for 3D stereoscopic cinemas and television, serious steps have also been taken in the field of 3D gaming. Games with stereoscopic 3D output are now available not only for gamers with high-end PCs but also on handheld devices equipped with autostereoscopic 3D displays. Recent smartphones have powerful processors that allow complex tasks like image processing, e.g., as used in augmented reality applications. Moreover, these devices are nowadays equipped with various sensors that allow additional input modalities far beyond joystick, mouse, keyboard, and other traditional input methods. In this paper we propose an approach for sensor-based interaction with stereoscopically displayed 3D data on mobile devices and present a mobile 3D game that makes use of these concepts.

Balloon Selection revisited - Multi-touch Selection Techniques for Stereoscopic Data

Florian Daiber; Eric Falk; Antonio Krüger

In: Proceedings of the International Conference on Advanced Visual Interfaces. Pages 441-444, ACM, 2012.

3D User Interfaces, Gestural Interaction, Selection techniques, Stereoscopic Display
